Build reliable Go applications: Configuring Azure Cosmos DB Go SDK for real-world scenarios

When building applications that interact with databases, developers often need to customize the SDK behavior to address real-world challenges like network instability, performance bottlenecks, debugging complexity, monitoring requirements, and more. This is especially true when working with a massively scalable, cloud-native, distributed database like Azure Cosmos DB.

Introduction

When building applications that interact with databases, developers frequently encounter scenarios where default SDK configurations don’t align with their specific operational requirements. They need to customize SDK behavior to address real-world challenges like network instability, performance bottlenecks, debugging complexity, monitoring requirements, and more. These factors become even more pronounced when working with a massively scalable, cloud-native, distributed database like Azure Cosmos DB.

This blog post explores how to customize and configure the Go SDK for Azure Cosmos DB beyond its default settings, covering techniques for modifying client behavior, implementing custom policies, accessing operational metrics, etc. These enable developers to build more resilient applications, troubleshoot issues effectively, and gain deeper insights into their database interactions.

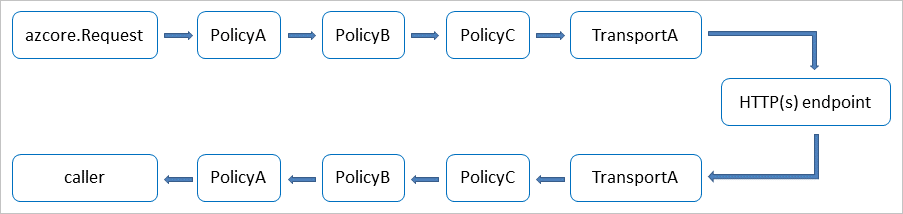

The Go SDK for Azure Cosmos DB is built on top of the core Azure Go SDK package, which implements several patterns that are applied throughout the SDK. The core SDK is designed to be quite customizable, and its configurations can be applied with the ClientOptions struct when creating a new Cosmos DB client object using NewClient (and other similar functions). If you peek inside the azcore.ClientOptions struct, you will notice that it has many options for configuring the HTTP client, retry policies, timeouts, and other settings.

Let’s dive into how to use (and extend) these common options when building Go applications with Azure Cosmos DB.

I have provided code snippets throughout this blog. Refer to this GitHub repository for runnable examples.

Retry policies

Common retry scenarios are handled in the SDK. Here is a summary of errors for which retries are attempted:

| Error Type / Status Code | Retry Logic |
|---|---|
| Network Connection Errors | Retry after marking endpoint unavailable and waiting for defaultBackoff. |
| 403 Forbidden (with specific substatuses) | Retry after marking endpoint unavailable and updating the endpoint manager. |
| 404 Not Found (specific substatus) | Retry by switching to another session or endpoint. |
| 503 Service Unavailable | Retry by switching to another preferred location. |

You can explore the source code in cosmos_client_retry_policy.go if you want to see the details of how the retry policy is implemented.

Let’s see some of these in action.

Non-Retriable Errors

When a request fails with a non-retriable error, the SDK does not retry the operation. This is useful for scenarios where the error indicates that the operation cannot succeed.

For example, here is a function that tries to read a database that does not exist.

    func retryPolicy1() {
        c, err := auth.GetClientWithDefaultAzureCredential("https://demodb.documents.azure.com:443/", nil)
        if err != nil {
            log.Fatal(err)
        }

        azlog.SetListener(func(cls azlog.Event, msg string) {
            // Log retry-related events
            switch cls {
            case azlog.EventRetryPolicy:
                fmt.Printf("Retry Policy Event: %s\n", msg)
            }
        })

        // Set logging level to include retries
        azlog.SetEvents(azlog.EventRetryPolicy)

        db, err := c.NewDatabase("i_dont_exist")
        if err != nil {
            log.Fatal("NewDatabase call failed", err)
        }

        _, err = db.Read(context.Background(), nil)
        if err != nil {
            log.Fatal("Read call failed: ", err)
        }
    }

The azcore logging implementation is configured using SetListener and SetEvents to write retry policy event logs to standard output.

See the Logging section in the azcosmos package README for details.

Let’s look at the logs generated when this code is run:

    //....
    Retry Policy Event: exit due to non-retriable status code
    Retry Policy Event: =====> Try=1 for GET https://demodb.documents.azure.com:443/dbs/i_dont_exist
    Retry Policy Event: response 404
    Retry Policy Event: exit due to non-retriable status code
    Read call failed: GET https://demodb-region.documents.azure.com:443/dbs/i_dont_exist
    --------------------------------------------------------------------------------
    RESPONSE 404: 404 Not Found
    ERROR CODE: 404 Not Found
    //...

When a request is made to read a non-existent database, the SDK gets a 404 (not found) response. This is recognized as a non-retriable error, so the SDK exits the retry loop after the first attempt. Retries are only performed for retriable errors (like network issues or certain status codes). The operation failed because the database does not exist.

Retriable Errors

When a request fails with a retriable error, the SDK automatically retries the operation based on the retry policy. This is useful for transient errors that may resolve themselves after a few attempts.

This function tries to create a Cosmos DB client using an invalid account endpoint. It sets up logging for retry policy events and attempts to create a database.

    func retryPolicy2() {
        c, err := auth.GetClientWithDefaultAzureCredential("https://iamnothere.documents.azure.com:443/", nil)
        if err != nil {
            log.Fatal(err)
        }

        azlog.SetListener(func(cls azlog.Event, msg string) {
            // Log retry-related events
            switch cls {
            case azlog.EventRetryPolicy:
                fmt.Printf("Retry Policy Event: %s\n", msg)
            }
        })

        // Set logging level to include retries
        azlog.SetEvents(azlog.EventRetryPolicy)

        _, err = c.CreateDatabase(context.Background(), azcosmos.DatabaseProperties{ID: "test"}, nil)
        if err != nil {
            log.Fatal(err)
        }
    }

Let’s look at the logs generated when this code is run. They show how the SDK handles retries when the endpoint is unreachable:

    //....
    Retry Policy Event: error Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    Retry Policy Event: End Try #1, Delay=682.644105ms
    Retry Policy Event: =====> Try=2 for GET https://iamnothere.documents.azure.com:443/
    Retry Policy Event: error Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    Retry Policy Event: End Try #2, Delay=2.343322179s
    Retry Policy Event: =====> Try=3 for GET https://iamnothere.documents.azure.com:443/
    Retry Policy Event: error Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    Retry Policy Event: End Try #3, Delay=7.177314269s
    Retry Policy Event: =====> Try=4 for GET https://iamnothere.documents.azure.com:443/
    Retry Policy Event: error Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    Retry Policy Event: MaxRetries 3 exceeded
    failed to retrieve account properties: Get "https://iamnothere.docume

Each failed attempt is logged, and the SDK retries the operation three times, with increasing delays between attempts. After exceeding the maximum number of retries, the operation fails with an error indicating the host could not be found. In other words, the SDK automatically retries transient network errors before giving up.

But you don’t have to stick to the default retry policy. You can customize the retry policy by setting the azcore.ClientOptions when creating the Cosmos DB client.

Configurable Retries

Let’s say you want a custom retry policy with a maximum of two retries and an initial delay of one second. RetryDelay sets the delay before the first retry; as the logs below show, later delays grow from there. You can do this by creating a policy.RetryOptions struct and passing it to the azcosmos.ClientOptions when creating the client.

    func retryPolicy3() {
        retryPolicy := policy.RetryOptions{
            MaxRetries: 2,
            RetryDelay: 1 * time.Second,
        }

        opts := azcosmos.ClientOptions{
            ClientOptions: policy.ClientOptions{
                Retry: retryPolicy,
            },
        }

        c, err := auth.GetClientWithDefaultAzureCredential("https://iamnothere.documents.azure.com:443/", &opts)
        if err != nil {
            log.Fatal(err)
        }

        log.Println(c.Endpoint())

        azlog.SetListener(func(cls azlog.Event, msg string) {
            // Log retry-related events
            switch cls {
            case azlog.EventRetryPolicy:
                fmt.Printf("Retry Policy Event: %s\n", msg)
            }
        })

        azlog.SetEvents(azlog.EventRetryPolicy)

        _, err = c.CreateDatabase(context.Background(), azcosmos.DatabaseProperties{ID: "test"}, nil)
        if err != nil {
            log.Fatal(err)
        }
    }

Each failed attempt is logged, and the SDK retries the operation according to the custom policy: only two retries, with a delay of roughly one second after the first attempt and a longer delay after the second. After reaching the maximum number of retries, the operation fails with an error indicating the host could not be found.

    Retry Policy Event: =====> Try=1 for GET https://iamnothere.documents.azure.com:443/
    //....
    Retry Policy Event: error Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    Retry Policy Event: End Try #1, Delay=1.211970493s
    Retry Policy Event: =====> Try=2 for GET https://iamnothere.documents.azure.com:443/
    Retry Policy Event: error Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    Retry Policy Event: End Try #2, Delay=3.300739653s
    Retry Policy Event: =====> Try=3 for GET https://iamnothere.documents.azure.com:443/
    Retry Policy Event: error Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    Retry Policy Event: MaxRetries 2 exceeded
    failed to retrieve account properties: Get "https://iamnothere.documents.azure.com:443/": dial tcp: lookup iamnothere.documents.azure.com: no such host
    exit status 1

Note: The first attempt is not counted as a retry, so the total number of attempts is three (1 initial + 2 retries).

You can customize this further by implementing fault injection policies. This allows you to simulate various error scenarios for testing purposes.

Fault Injection

You can create custom policies to inject faults into the request pipeline. This is useful for testing how your application handles various error scenarios without needing to rely on actual network failures or service outages.

For example, here is a custom policy (FaultInjectionPolicy) that simulates a network error with a configurable probability on each request attempt.

    type FaultInjectionPolicy struct {
        failureProbability float64 // e.g., 0.3 for 30% chance to fail
    }

    // Implement the Policy interface
    func (f *FaultInjectionPolicy) Do(req *policy.Request) (*http.Response, error) {
        if rand.Float64() < f.failureProbability {
            // Simulate a network error
            return nil, &net.OpError{
                Op:  "read",
                Net: "tcp",
                Err: errors.New("simulated network failure"),
            }
        }
        // no failure - continue with the request
        return req.Next()
    }

This function configures the Cosmos DB client to use this policy, sets up logging for retry events, and attempts to create a database.

    func retryPolicy4() {
        opts := azcosmos.ClientOptions{
            ClientOptions: policy.ClientOptions{
                PerRetryPolicies: []policy.Policy{&FaultInjectionPolicy{failureProbability: 0.6}},
            },
        }

        c, err := auth.GetClientWithDefaultAzureCredential("https://ACCOUNT_NAME.documents.azure.com:443/", &opts)
        if err != nil {
            log.Fatal(err)
        }

        azlog.SetListener(func(cls azlog.Event, msg string) {
            // Log retry-related events
            switch cls {
            case azlog.EventRetryPolicy:
                fmt.Printf("Retry Policy Event: %s\n", msg)
            }
        })

        // Set logging level to include retries
        azlog.SetEvents(azlog.EventRetryPolicy)

        _, err = c.CreateDatabase(context.Background(), azcosmos.DatabaseProperties{ID: "test_1"}, nil)
        if err != nil {
            log.Fatal(err)
        }
    }

Take a look at the logs generated when this code is run. In this run, each request attempt fails due to the simulated network error. The SDK logs each retry, with increasing delays between attempts. After reaching the maximum number of retries (default = 3), the operation fails with an error indicating a simulated network failure.

Note: This can change depending on the failure probability you set in the FaultInjectionPolicy. In this case, we set it to 0.6 (60% chance to fail), so you may see different results each time you run the code.

    Retry Policy Event: =====> Try=1 for GET https://ACCOUNT_NAME.documents.azure.com:443/
    //....
    Retry Policy Event: MaxRetries 0 exceeded
    Retry Policy Event: error read tcp: simulated network failure
    Retry Policy Event: End Try #1, Delay=794.018648ms
    Retry Policy Event: =====> Try=2 for GET https://ACCOUNT_NAME.documents.azure.com:443/
    Retry Policy Event: error read tcp: simulated network failure
    Retry Policy Event: End Try #2, Delay=2.374693498s
    Retry Policy Event: =====> Try=3 for GET https://ACCOUNT_NAME.documents.azure.com:443/
    Retry Policy Event: error read tcp: simulated network failure
    Retry Policy Event: End Try #3, Delay=7.275038434s
    Retry Policy Event: =====> Try=4 for GET https://ACCOUNT_NAME.documents.azure.com:443/
    Retry Policy Event: error read tcp: simulated network failure
    Retry Policy Event: MaxRetries 3 exceeded
    Retry Policy Event: =====> Try=1 for GET https://ACCOUNT_NAME.documents.azure.com:443/
    Retry Policy Event: error read tcp: simulated network failure
    Retry Policy Event: End Try #1, Delay=968.457331ms
    2025/05/05 19:53:50 failed to retrieve account properties: read tcp: simulated network failure
    exit status 1

Do take a look at Custom HTTP pipeline policies in the Azure SDK for Go documentation for more information on how to implement custom policies.

HTTP-level customizations

There are scenarios where you may need to customize the HTTP client used by the SDK. For example, when using the Cosmos DB emulator locally, you may want to skip certificate verification so you can connect without TLS certificate errors during development or testing.

TLSClientConfig lets you customize TLS settings for the HTTP client. Setting InsecureSkipVerify: true disables certificate verification, which is useful for local testing but insecure in production.

    func customHTTP1() {
        // Create a custom HTTP client that skips TLS certificate verification
        client := &http.Client{
            Transport: &http.Transport{
                TLSClientConfig: &tls.Config{InsecureSkipVerify: true},
            },
        }

        clientOptions := &azcosmos.ClientOptions{
            ClientOptions: azcore.ClientOptions{
                Transport: client,
            },
        }

        c, err := auth.GetEmulatorClientWithAzureADAuth("http://localhost:8081", clientOptions)
        if err != nil {
            log.Fatal(err)
        }

        _, err = c.CreateDatabase(context.Background(), azcosmos.DatabaseProperties{ID: "test"}, nil)
        if err != nil {
            log.Fatal(err)
        }
    }

All you need to do is pass the custom HTTP client to the ClientOptions struct when creating the Cosmos DB client. The SDK will use this for all requests.

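Another scenario is when you want to set a custom header on all requests, for example to track requests or add metadata. Implement the Do method of the policy.Policy interface, set the header on the request, and register the policy through PerCallPolicies or PerRetryPolicies in ClientOptions (as with FaultInjectionPolicy earlier):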

    type CustomHeaderPolicy struct{}

    func (c *CustomHeaderPolicy) Do(req *policy.Request) (*http.Response, error) {
        correlationID := uuid.New().String()
        req.Raw().Header.Set("X-Correlation-ID", correlationID)
        return req.Next()
    }

Looking at the logs, notice the custom header X-Correlation-ID is added to each request:

    //...
    Request Event: ==> OUTGOING REQUEST (Try=1)
    GET https://ACCOUNT_NAME.documents.azure.com:443/
    Authorization: REDACTED
    User-Agent: azsdk-go-azcosmos/v1.3.0 (go1.23.6; darwin)
    X-Correlation-Id: REDACTED
    X-Ms-Cosmos-Sdk-Supportedcapabilities: 1
    X-Ms-Date: Tue, 06 May 2025 04:27:37 GMT
    X-Ms-Version: 2020-11-05

    Request Event: ==> OUTGOING REQUEST (Try=1)
    POST https://ACCOUNT_NAME-region.documents.azure.com:443/dbs
    Authorization: REDACTED
    Content-Length: 27
    Content-Type: application/query+json
    User-Agent: azsdk-go-azcosmos/v1.3.0 (go1.23.6; darwin)
    X-Correlation-Id: REDACTED
    X-Ms-Cosmos-Sdk-Supportedcapabilities: 1
    X-Ms-Date: Tue, 06 May 2025 04:27:37 GMT
    X-Ms-Documentdb-Query: True
    X-Ms-Version: 2020-11-05
    //....

Query and Index Metrics

The Go SDK provides a way to access query and index metrics, which can help you optimize your queries and understand their performance characteristics.

Query Metrics

When executing queries, you can get basic metrics about the query execution. The Go SDK exposes them through the QueryMetrics field of the QueryItemsResponse returned for each page. They include execution timings, the number of documents retrieved, and more.
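The metrics value arrives as a single string of semicolon-separated key=value pairs (for example totalExecutionTimeInMs=0.34;retrievedDocumentCount=9), so it usually needs parsing before it can be logged as structured data or compared across runs. Here is a minimal sketch of one way to do that; parseQueryMetrics is a hypothetical helper rather than part of azcosmos, and it assumes every value is numeric.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseQueryMetrics splits "k1=v1;k2=v2" into a map of float64 values.
// It assumes every value is numeric and returns an error otherwise.
func parseQueryMetrics(raw string) (map[string]float64, error) {
	metrics := make(map[string]float64)
	for _, pair := range strings.Split(raw, ";") {
		pair = strings.TrimSpace(pair)
		if pair == "" {
			continue // tolerate a trailing or doubled semicolon
		}
		key, value, ok := strings.Cut(pair, "=")
		if !ok {
			return nil, fmt.Errorf("malformed metric %q", pair)
		}
		v, err := strconv.ParseFloat(value, 64)
		if err != nil {
			return nil, fmt.Errorf("metric %q: %w", key, err)
		}
		metrics[key] = v
	}
	return metrics, nil
}

func main() {
	raw := "totalExecutionTimeInMs=0.34;retrievedDocumentCount=9;indexUtilizationRatio=1.00"
	m, err := parseQueryMetrics(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(m["totalExecutionTimeInMs"], m["retrievedDocumentCount"])
}
```

A map keeps the sketch short; a struct with named fields may be a better fit if you only care about a handful of metrics.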

    func queryMetrics() {
        //....
        container, err := c.NewContainer("existing_db", "existing_container")
        if err != nil {
            log.Fatal(err)
        }

        query := "SELECT * FROM c"
        pager := container.NewQueryItemsPager(query, azcosmos.NewPartitionKey(), nil)

        for pager.More() {
            queryResp, err := pager.NextPage(context.Background())
            if err != nil {
                log.Fatal("query items failed:", err)
            }

            // QueryMetrics is a pointer; guard against nil before dereferencing
            if queryResp.QueryMetrics != nil {
                log.Println("query metrics:\n", *queryResp.QueryMetrics)
            }
            //....
        }
    }

The query metrics are provided as a raw string of semicolon-separated key=value pairs, which is easy to parse. Here is an example:

    totalExecutionTimeInMs=0.34;queryCompileTimeInMs=0.04;queryLogicalPlanBuildTimeInMs=0.00;queryPhysicalPlanBuildTimeInMs=0.02;queryOptimizationTimeInMs=0.00;VMExecutionTimeInMs=0.07;indexLookupTimeInMs=0.00;instructionCount=41;documentLoadTimeInMs=0.04;systemFunctionExecuteTimeInMs=0.00;userFunctionExecuteTimeInMs=0.00;retrievedDocumentCount=9;retrievedDocumentSize=1251;outputDocumentCount=9;outputDocumentSize=2217;writeOutputTimeInMs=0.02;indexUtilizationRatio=1.00

Here is a breakdown of the metrics you can obtain from the query response:

| Metric | Unit | Description |
|---|---|---|
| totalExecutionTimeInMs | ms | Total time taken to execute the query, including all phases. |
| queryCompileTimeInMs | ms | Time spent compiling the query. |
| queryLogicalPlanBuildTimeInMs | ms | Time spent building the logical plan for the query. |
| queryPhysicalPlanBuildTimeInMs | ms | Time spent building the physical plan for the query. |
| queryOptimizationTimeInMs | ms | Time spent optimizing the query. |
| VMExecutionTimeInMs | ms | Time spent executing the query in the Cosmos DB VM. |
| indexLookupTimeInMs | ms | Time spent looking up indexes. |
| instructionCount | count | Number of instructions executed for the query. |
| documentLoadTimeInMs | ms | Time spent loading documents from storage. |
| systemFunctionExecuteTimeInMs | ms | Time spent executing system functions in the query. |
| userFunctionExecuteTimeInMs | ms | Time spent executing user-defined functions in the query. |
| retrievedDocumentCount | count | Number of documents retrieved by the query. |
| retrievedDocumentSize | bytes | Total size of documents retrieved. |
| outputDocumentCount | count | Number of documents returned as output. |
| outputDocumentSize | bytes | Total size of output documents. |
| writeOutputTimeInMs | ms | Time spent writing the output. |
| indexUtilizationRatio | ratio | Ratio of index utilization (1.0 means fully utilized). |

Index Metrics

Indexing metrics show both utilized indexed paths and recommended indexed paths. You can use them to optimize query performance, especially when you aren’t sure how to modify the indexing policy.

To enable indexing metrics in the Go SDK, set PopulateIndexMetrics to true in QueryOptions. The index metrics data in the QueryItemsResponse is base64 encoded and needs to be decoded before it can be used.

Enabling indexing metrics incurs overhead, so do it only when debugging slow queries; it is not recommended in production.

    pager := container.NewQueryItemsPager("SELECT c.id FROM c WHERE CONTAINS(LOWER(c.description), @word)", azcosmos.NewPartitionKey(), &azcosmos.QueryOptions{
        PopulateIndexMetrics: true,
        QueryParameters: []azcosmos.QueryParameter{
            {
                Name:  "@word",
                Value: "happy",
            },
        },
    })

    if pager.More() {
        page, err := pager.NextPage(context.Background())
        if err != nil {
            log.Fatal("query items failed: ", err)
        }
        // process results

        // IndexMetrics is a pointer; guard against nil before decoding
        if page.IndexMetrics != nil {
            decoded, err := base64.StdEncoding.DecodeString(*page.IndexMetrics)
            if err != nil {
                log.Fatal("failed to decode index metrics: ", err)
            }
            log.Println("Index metrics", string(decoded))
        }
    }

Once decoded, the index metrics are available in JSON format. For example:

    {
        "UtilizedSingleIndexes": [
            {
                "FilterExpression": "",
                "IndexSpec": "/description/?",
                "FilterPreciseSet": true,
                "IndexPreciseSet": true,
                "IndexImpactScore": "High"
            }
        ],
        "PotentialSingleIndexes": [],
        "UtilizedCompositeIndexes": [],
        "PotentialCompositeIndexes": []
    }

OpenTelemetry support

The Azure Go SDK supports distributed tracing via OpenTelemetry. This allows you to collect, export, and analyze traces for requests made to Azure services, including Cosmos DB.

The azotel package is used to connect an instance of OpenTelemetry’s TracerProvider to an Azure SDK client (in this case Cosmos DB). You can then configure the TracingProvider in azcore.ClientOptions to enable automatic propagation of trace context and emission of spans for SDK operations.

    func getClientOptionsWithTracing() (*azcosmos.ClientOptions, *trace.TracerProvider) {
        exporter, err := stdouttrace.New(stdouttrace.WithPrettyPrint())
        if err != nil {
            log.Fatalf("failed to initialize stdouttrace exporter: %v", err)
        }

        tp := trace.NewTracerProvider(trace.WithBatcher(exporter))
        otel.SetTracerProvider(tp)

        op := azcosmos.ClientOptions{
            ClientOptions: policy.ClientOptions{
                TracingProvider: azotel.NewTracingProvider(tp, nil),
            },
        }

        return &op, tp
    }

The above function creates a stdout exporter for OpenTelemetry (it prints traces to the console). It sets up a TracerProvider, registers it as the global tracer provider, and returns a ClientOptions struct with the TracingProvider set, ready to be used with the Cosmos DB client.

    func tracing() {
        op, tp := getClientOptionsWithTracing()
        defer func() { _ = tp.Shutdown(context.Background()) }()

        c, err := auth.GetClientWithDefaultAzureCredential("https://ACCOUNT_NAME.documents.azure.com:443/", op)
        //....

        container, err := c.NewContainer("existing_db", "existing_container")
        if err != nil {
            log.Fatal(err)
        }

        // Start a parent span; SDK spans are created as its children via ctx
        tracer := otel.Tracer("tracer_app1")
        ctx, span := tracer.Start(context.Background(), "query-items-operation")
        defer span.End()

        query := "SELECT * FROM c"
        pager := container.NewQueryItemsPager(query, azcosmos.NewPartitionKey(), nil)

        for pager.More() {
            queryResp, err := pager.NextPage(ctx)
            if err != nil {
                log.Fatal("query items failed:", err)
            }

            for _, item := range queryResp.Items {
                log.Printf("Queried item: %+v\n", string(item))
            }
        }
    }

The above function calls getClientOptionsWithTracing to get tracing-enabled options and a tracer provider, and ensures the tracer provider is shut down at the end (which flushes pending traces). It creates a Cosmos DB client with tracing enabled and queries items in a container. The SDK call is traced automatically and, in this case, exported to stdout.

You can plug in any OpenTelemetry-compatible tracer provider, and traces can be exported to various backends. Here is a snippet for the Jaeger exporter.

The traces are quite large, so here is a small snippet of the trace output. Check the query_items_trace.txt file in the repo for the full trace output:

//...

    {
        "Name": "query_items democontainer",
        "SpanContext": {
            "TraceID": "39a650bcd34ff70d48bbee467d728211",
            "SpanID": "f2c892bec75dbf5d",
            "TraceFlags": "01",
            "TraceState": "",
            "Remote": false
        },
        "Parent": {
            "TraceID": "39a650bcd34ff70d48bbee467d728211",
            "SpanID": "b833d109450b779b",
            "TraceFlags": "01",
            "TraceState": "",
            "Remote": false
        },
        "SpanKind": 3,
        "StartTime": "2025-05-06T17:59:30.90146+05:30",
        "EndTime": "2025-05-06T17:59:36.665605042+05:30",
        "Attributes": [
            {
                "Key": "db.system",
                "Value": {
                    "Type": "STRING",
                    "Value": "cosmosdb"
                }
            },
            {
                "Key": "db.cosmosdb.connection_mode",
                "Value": {
                    "Type": "STRING",
                    "Value": "gateway"
                }
            },
            {
                "Key": "db.namespace",
                "Value": {
                    "Type": "STRING",
                    "Value": "demodb-gosdk3"
                }
            },
            {
                "Key": "db.collection.name",
                "Value": {
                    "Type": "STRING",
                    "Value": "democontainer"
                }
            },
            {
                "Key": "db.operation.name",
                "Value": {
                    "Type": "STRING",
                    "Value": "query_items"
                }
            },
            {
                "Key": "server.address",
                "Value": {
                    "Type": "STRING",
                    "Value": "ACCOUNT_NAME.documents.azure.com"
                }
            },
            {
                "Key": "az.namespace",
                "Value": {
                    "Type": "STRING",
                    "Value": "Microsoft.DocumentDB"
                }
            },
            {
                "Key": "db.cosmosdb.request_charge",
                "Value": {
                    "Type": "STRING",
                    "Value": "2.37"
                }
            },
            {
                "Key": "db.cosmosdb.status_code",
                "Value": {
                    "Type": "INT64",
                    "Value": 200
                }
            }
        ],
    //....

Conclusion

The Go SDK for Azure Cosmos DB is designed to be flexible and customizable, allowing you to tailor it to your specific needs. In this blog, we covered how to configure and customize the Go SDK for Azure Cosmos DB. We looked at retry policies, HTTP-level customizations, OpenTelemetry support, and how to access metrics.

For more information, refer to the package documentation and the GitHub repository. I hope you find this useful!