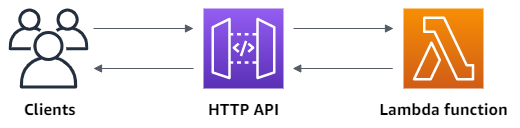

The combination of AWS Lambda and Amazon API Gateway is a widely used architecture for serverless microservices and API-based solutions. Together, they let developers focus on their applications instead of spending time provisioning and managing servers.

API Gateway is a feature-rich offering that includes support for different API types (HTTP, REST, WebSocket), multiple authentication schemes, API versioning, canary deployments, and much more! However, if your requirements are simpler and all you need is an HTTP(S) endpoint for your Lambda function (for example, to serve as a webhook), you can use Lambda function URLs! When you create a function URL, Lambda automatically generates a unique HTTP(S) endpoint dedicated to your Lambda function.
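To make the webhook use case concrete, here is a minimal sketch of a function that could sit behind a function URL. It assumes the Python runtime and a JSON webhook body; the name `handler` and the `{"received": ...}` response shape are hypothetical choices for illustration. Function URL requests arrive in the same event shape as API Gateway payload format version 2.0, which is where the `requestContext.http.method`, `body`, and `isBase64Encoded` fields come from.

```python
import base64
import json


def handler(event, context):
    """Hypothetical webhook handler invoked through a Lambda function URL."""
    # The HTTP method is nested under requestContext.http in this event format.
    method = event.get("requestContext", {}).get("http", {}).get("method", "")
    if method != "POST":
        return {"statusCode": 405, "body": "method not allowed"}

    # The body is a string; Lambda base64-encodes it for binary payloads.
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")

    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return {"statusCode": 400, "body": "invalid JSON"}

    # Echo the parsed payload back (an assumption made for this sketch).
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"received": payload}),
    }
```

Because the handler only reads from a plain dictionary, you can exercise it locally by passing a hand-built event before attaching it to a real function URL.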
